What is prompt programming and why is it one of the most valuable skills in the world of AI-generated images?

Image from my Instagram post

Disclaimer: I am not an expert on any of the topics I discuss below; I am just curious and I like to experiment + learn. I’m using this platform to log my thoughts, ideas and whatever else comes to mind. Please take what I write with a pinch of salt, and always do your own research. I am not a very good writer either, so be prepared to read a lot of fluff.

If anything I write is wrong, please do correct me, but be polite about it. Thank you!

PS: Read about my experiments with prompt engineering and non-English languages here

Date: Friday 24/06/22

So now that Dall-E mini has exploded on the internet, it’s time to look into what makes it so unique, and how we can push the limits of this uber damn cool tool.

My journey with AI-generated images is spotty.

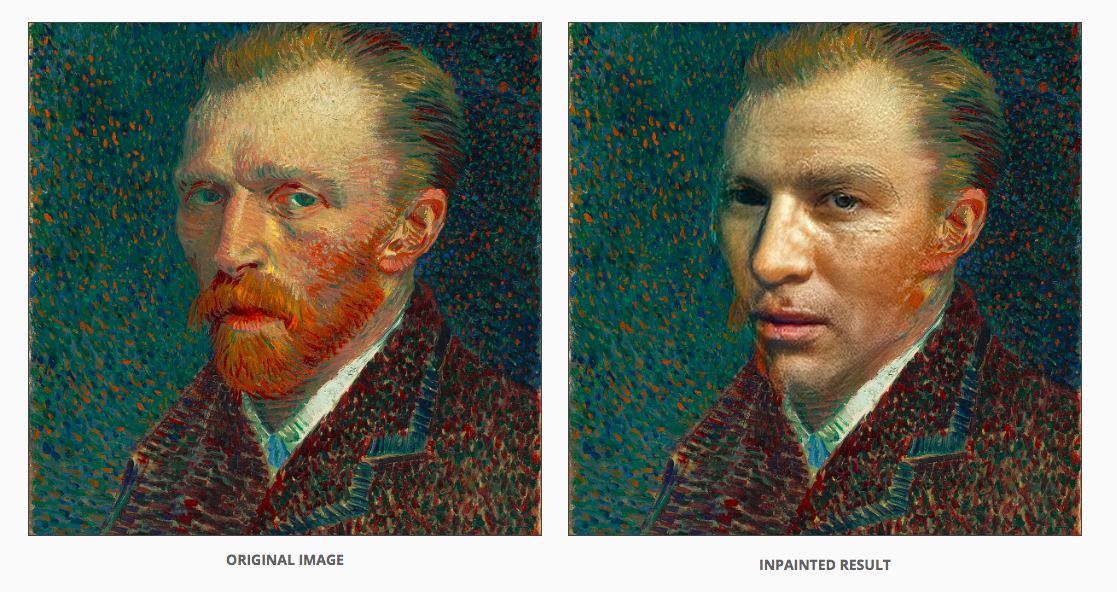

Back in 2019 and 2020, I was experimenting with no-code generators such as that weird drawing-to-cat generator (pix2pix), NVIDIA’s Image InPainting and, of course, ArtBreeder!

I had no idea what was happening, but I was enjoying generating images tremendously. At this point, my knowledge of AI came only from university: I knew about chatbots and data biases, and I had only talked about them on a philosophical level. For example, Feminist Internet taught me through a chatbot workshop that gendered AIs, specifically PIAs (bots like Tay on Twitter, Siri on Apple or Alexa on Amazon), could, if left unchecked, lead to problematic consequences such as reinforcing negative stereotypes of women.

My test with Van Gogh


My test with King of the Hill

In my third year, I was part of a research group called Interpolate, where we talked about all the points between digital and analogue, and we got really deep when talking about chatbots. We looked into how chatbots affected natural language and co-programmed a chatbot using RiveScript and JavaScript. Throughout the whole process, I really got to understand the principle of ‘coding’ dialogue, and our perceptions of natural language, and of what differentiates a human from a machine, really shone through. Coding in ‘natural bugs’ and ‘slip-ups’ to create a chatbot that was closer to human language was fascinating: it meant decoding our own bugs, breaking down the flaws of our own language to understand where those bugs were coming from. We even presented it at the 2019 IAM (Internet Age Media) Conference in Barcelona and ran a chatbot workshop there too.

Screenshot of our Interpolate chatbot, with the script I coded

Image from our workshop at IAM in Barcelona

Anyways, after graduating at the end of 2019, I decided to sharpen my knowledge and open up the black box with UAL CCI x FutureLearn’s Introduction to Creative AI course, where I learned the basics of machine and deep learning. After that, I pretty much only taught the basics of what I already knew to my third-year students on BA Graphic Design at Sheffield Hallam University (hello guys! y’all are great). I also got really invested in the concept of deepfakes: I ran two workshops about them, one with Identity 2.0 and another (Envisions) for Feminist Internet and the Goethe Institute, and co-authored a paper. But I never really pushed my actual design experiments, which was a shame!

So fast forward to 2022 and Dall-E mini + Dall-E 2 EXPLODED! I started seeing them everywhere, and I knew this was going to become the new NFTs. I was so angry at Dall-E 2 for not letting me in off the waitlist: I had tried joining back in 2021 and was still nowhere close to getting in. So out of spite, I started scouring the Internet for an alternative, and lo and behold, I found the wonderful MindsEye Beta by multimodal.art, and of course, Dall-E mini.

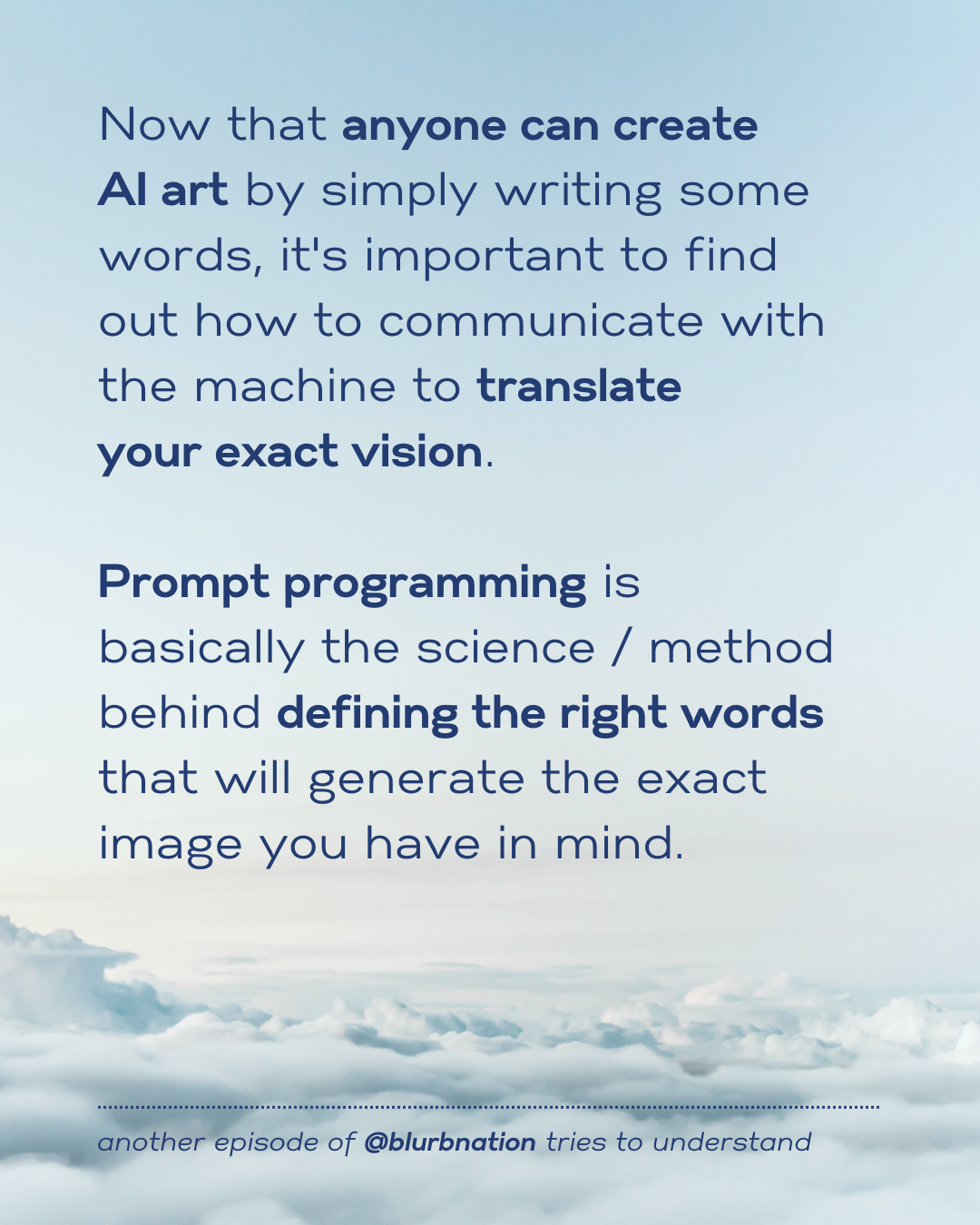

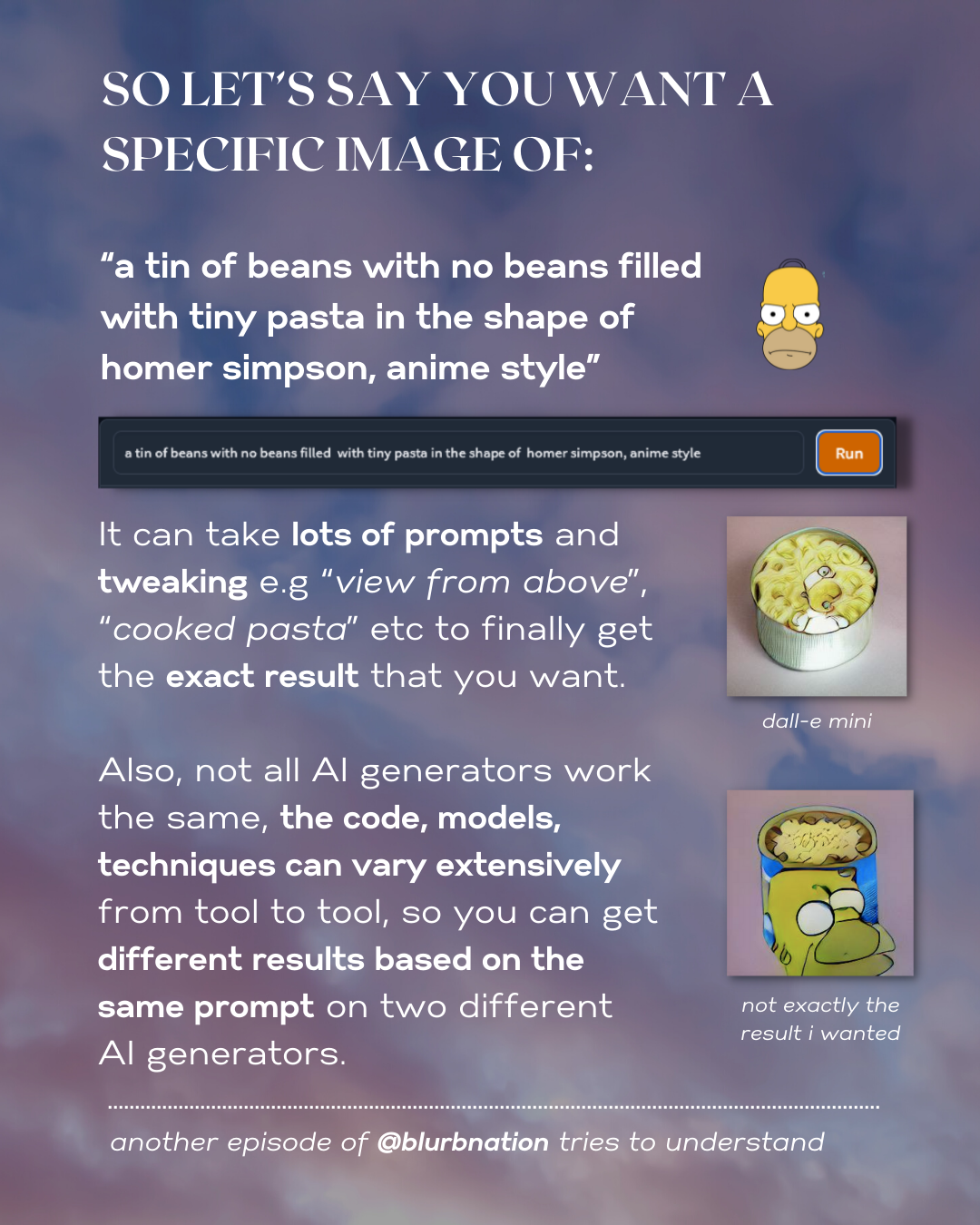

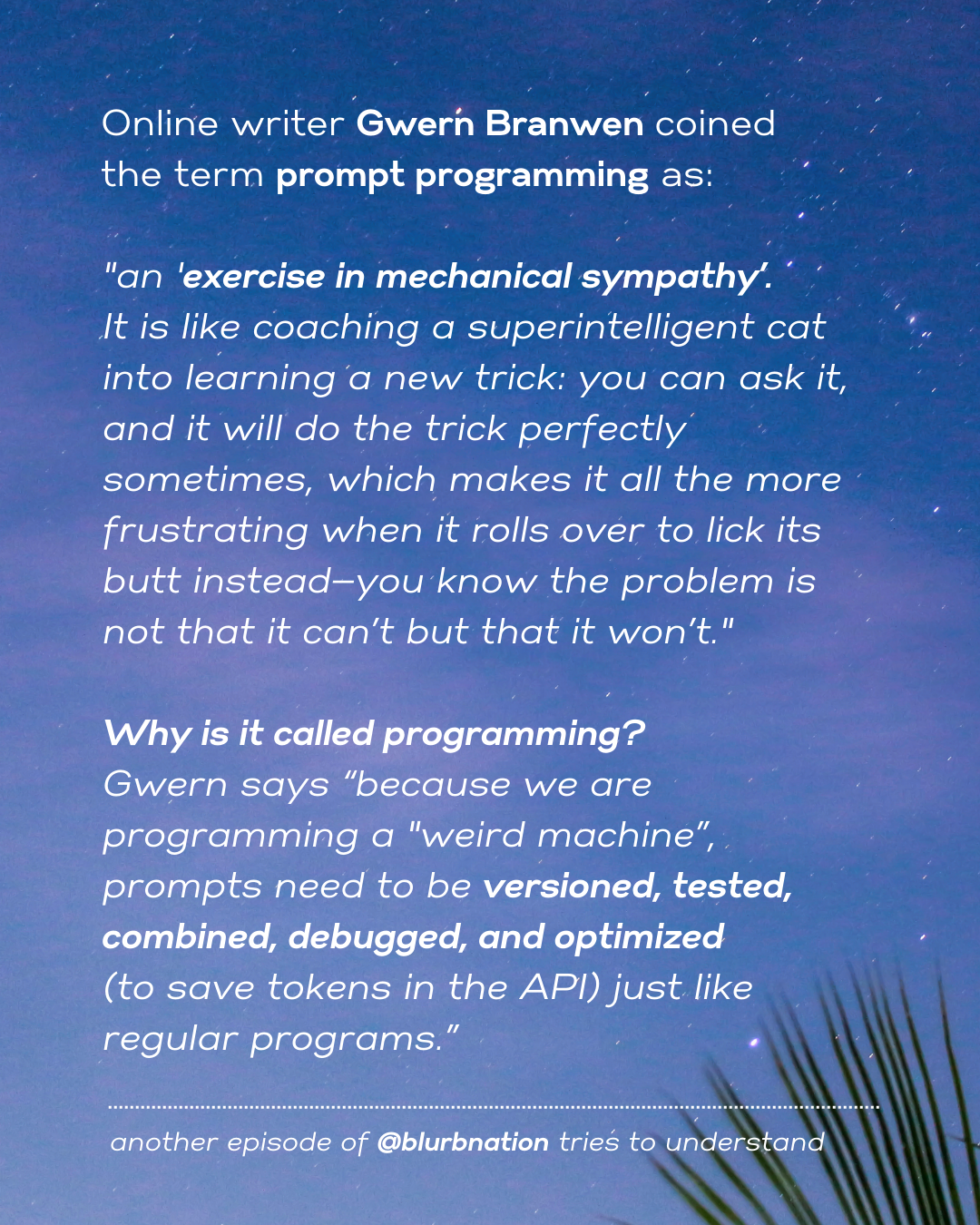

When you first try out MindsEye Beta, they link you to a great article on Matthew McAteer’s blog about CLIP prompt engineering for generative art and how to refine prompts. Through that, I did a deep dive and found Gwern Branwen’s original article, which coined the term back in 2020, and a Reddit post about ‘The Problem of Prompt Engineering’. So below is my breakdown of this important concept, in the form of a carousel Instagram post:

QUESTIONS I HAVE AFTER MAKING THIS POST:

- So does that mean that if I’m rubbish at expressing myself, this will affect my generated images? Should I read more books and expand both my vocabulary and my syntax?

- What about slang? How good is it with slang? What about other evolving languages like algospeak, such as ‘unalive’ and ‘seggs’?

- Does this mean that the engineers who trained the GPT-3 model have an excellent grasp of English? And do they all have the exact SAME grasp of language too?

- What about other languages like French and Vietnamese? Was the model trained on data from the internet? Since most content on the internet is written in English, does that mean it won’t understand French and Vietnamese slang as well?

- What about languages that don’t use the Latin alphabet, such as Arabic and Hindi? Will it understand them?

- If its training data comes mostly from the internet, it should pick up on them, right?

- So does the model build a comprehensive understanding out of a group of other humans’ understanding of visual things?

- Why can’t AI image generators produce clear, legible words on things based on the words they’re given, or have I just been using them wrong this whole time and I’m just crap at prompt programming?

- If I use other languages, does it translate them?

- What if there is no prompt? https://restofworld.org/2022/dall-e-mini-women-in-saris/