Integrating AI-generated images and DALL-E 2 in AR Instagram filters using Spark AR

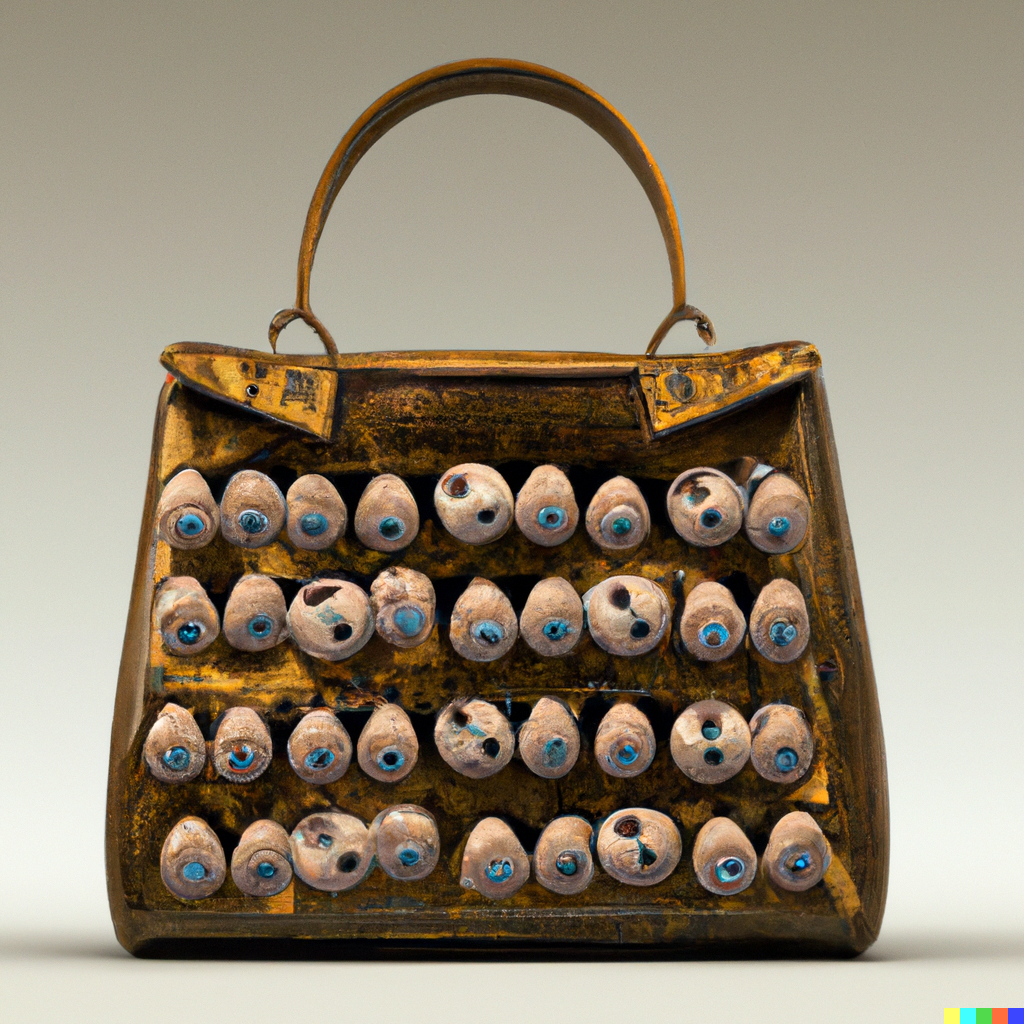

A screenshot of my AR filter: DALL-E / AI bags

Disclaimer: I am not an expert on any of the topics I discuss below. I am just curious and I like to experiment + learn. I’m using this platform to log my thoughts, ideas and whatever else comes to mind. Please take what I write with a pinch of salt, and always do your own research. I am not a very good writer either, so be prepared to read a lot of fluff.

If anything I write is wrong, please do correct me, but be polite about it. Thank you!

Date: Friday 15/07/22


One of the hardest things about experimenting with a new tool is finding the right context in which to use it. The second hardest thing is finding a way to integrate it within your existing processes as a designer / artist.

This is my second experiment where I merged AI and AR together. This time I decided to create an AR filter using Spark AR software after generating a series of AI bags.

As AI fashion was on the rise and I suck at fashion, I realised that this tool would be super helpful to allow me to explore digital fashion without technical barriers. Inspired by one of my best friends who is a fashion designer, I started generating a series of AI bags with DALL-E 2 using a mix of insects and fossils as prompts!

Some are transparent because I had to remove the background for the filters lol

And then I was stuck. I didn’t know how to bring these bags into my workflow. I had started re-learning / developing my skills in Spark, and I wanted to learn how to create shaders and SDFs (signed distance fields) so that I could have low-storage procedural textures instead of relying on PNGs with large file sizes. That gave me the idea of a world effect that would show the AI bags coming into our real-life world through a portal in the ground, Stranger Things style.
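For anyone wondering what an SDF actually is, here is a minimal sketch in plain Python (not Spark AR patches or shader code, and not how my filter is built). It assumes the portal is just a circle: the function returns a negative distance inside the circle and a positive one outside, and a soft mask is derived from that. The names `circle_sdf`, `portal_mask` and `render_ascii`, and the radius and softness values, are made up for illustration.

```python
import math


def circle_sdf(x, y, radius):
    """Signed distance from (x, y) to a circle centred at the origin.

    Negative inside the circle, zero on the edge, positive outside.
    """
    return math.hypot(x, y) - radius


def smoothstep(edge0, edge1, x):
    """Smooth 0-to-1 ramp between edge0 and edge1 (clamped outside)."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def portal_mask(x, y, radius=0.5, softness=0.05):
    """1.0 inside the portal, 0.0 outside, with a soft edge in between."""
    d = circle_sdf(x, y, radius)
    return 1.0 - smoothstep(-softness, softness, d)


def render_ascii(size=21):
    """Draw the mask as text so the shape can be checked without a GPU."""
    rows = []
    for j in range(size):
        row = ""
        for i in range(size):
            # Map pixel indices to coordinates in [-1, 1].
            x = 2.0 * i / (size - 1) - 1.0
            y = 2.0 * j / (size - 1) - 1.0
            row += "#" if portal_mask(x, y) > 0.5 else "."
        rows.append(row)
    return "\n".join(rows)


if __name__ == "__main__":
    print(render_ascii())
```

The whole shape comes from a couple of lines of maths and two numbers, which is why a procedural texture like this can take far less storage than a PNG.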

a very basic sketch

So I started planning the effect:

1. Start with the Spark template of a 3D object coming out of the ground

2. Use this tutorial for SDFs

3. Use the AR Library for patches & blocks - do not reinvent the wheel

4. Simply add the bags as planes

5. Test, test, test

The making bit

One of the most annoying things about having a crappy old iPhone 6S is that a lot of the cutting-edge technologies you want to use often aren’t compatible. A lot of world effects are dodgy on my phone: the ‘pop out of the ground’ animation often starts way off and you always have to reposition your tracker. So step 5 was essential in my case, since whatever was shown in the preview window often wasn’t the reality on my phone. Also my framerate sucked BAD!

In the preview (left) vs on my phone (right)

As I was working on the filter, I decided to add some sound to make it more immersive. Inspired by Stranger Things, I made the portal red with red procedural textures and created my own audio piece from 8 different Facebook sounds, which I then mixed by following an Audio Mixer tutorial. This actually took the longest, as I’d never done this before and I didn’t understand what ‘gain’ and ‘noise’ were, so I played around with a lot of values to grasp each setting and get the result that I wanted.

I didn’t manage to slow down the sounds though :(
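On the ‘gain’ setting: a rough sketch of what gain does in general audio terms, written in plain Python rather than Spark AR patches. I am assuming gain in Spark behaves like it does in most audio tools, i.e. a volume multiplier applied to each sample before the sounds are added together. The helper names (`db_to_linear`, `apply_gain`, `mix`) are hypothetical and the tracks are tiny made-up lists, not my actual sounds.

```python
def db_to_linear(gain_db):
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


def apply_gain(samples, gain):
    """Scale every sample by the same linear gain (1.0 leaves it unchanged)."""
    return [s * gain for s in samples]


def mix(tracks, gains):
    """Add tracks together sample by sample, each scaled by its own gain.

    Shorter tracks are treated as silence once they run out, and the
    result is clamped to [-1.0, 1.0] so loud mixes clip instead of overflowing.
    """
    length = max(len(track) for track in tracks)
    out = [0.0] * length
    for track, gain in zip(tracks, gains):
        for i, sample in enumerate(track):
            out[i] += sample * gain
    return [max(-1.0, min(1.0, s)) for s in out]


if __name__ == "__main__":
    rumble = [0.5, 0.5, 0.5, 0.5]
    hiss = [0.2, -0.2, 0.2]
    # Keep the rumble at full volume and turn the hiss down by 6 dB.
    print(mix([rumble, hiss], [1.0, db_to_linear(-6.0)]))
```

A gain of 1.0 leaves a sound as it is, values below 1.0 make it quieter, and values above 1.0 make it louder until it clips.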

My patch editor became like one of those huge patch graphs I always see on the Spark AR Community lol. Am I finally one of yous?

My patch editor

Presentation: one of my biggest challenges

Anyways, once I was done, the next challenge was to figure out how to present the filters.

One of my biggest design challenges is figuring out how to bring gravitas to a creative output through its presentation. One of the best ways to bring it back to the real world, and add to the effect itself, is to find the best environment to film the filter in.

This is SO important. It adds so much value to the concept, and when presenting a filter on Instagram, I gotta make it pop, I gotta make it look like it wasn’t made in my bedroom but in a sleek studio (lol). This can often be the make-or-break moment for a filter, and the presentation will affect your filter’s insights.

So I brainstormed some environments which would suit my AI bag portal and I figured that I could do a two-birds-one-stone: film the filter in a luxury bag shop. By doing this, I would be choosing an environment that is already curated and decorated in the sleekest of ways and is literally adapted for my product. Luxury bag shops like Balenciaga and Burberry already have the display for high-end bags, and if I shoot my filter in those stores and post the videos on my Instagram, maybe they would be inspired to actually create their own AI bags with crazy designs, who knows!

I don’t know enough about fashion to know which bag would suit which brand, so I settled on Harrods, since you can find a collection of high-fashion brands there like Dior, Prada, Celine, YSL and Balenciaga, instead of going to each of those individual shops and being ogled at.

Also I just want to interject that filming with my crappy iPhone 6S was super difficult because screen recording didn’t work at all when the filters were on. The screen would go black and I would get errors all over the place (it worked fine when the filters were not on), so I simply had to record each bag one by one instead of recording all of them in one go. In hindsight that was actually a better idea anyways!

And here is what it looks like!

NEXT TIME:

I want to find a way to 3D render the bags and figure out how to slow sound down.

Also more SDF tutorials!